Peppa Pig’s tale of torture? Why parents can’t rely on platforms like YouTube Kids for child-friendly fare

Platforms like Netflix and YouTube Kids can seem like the ideal digital daycare for busy parents who see the educational and age-appropriate offerings as a way to keep their kids entertained and engaged while they try to manage other parts of their hectic lives.

But as disturbing content starts slipping through the cracks of the “kid-friendly” filters, parents and experts are questioning just how much we can rely on these algorithmic black box babysitters.

Recently, the New York Times published an article about “startling videos” slipping past YouTube’s filters.

The piece shed light on worrisome content appearing in child-safe searches, including creepy knock-offs of Nickelodeon’s PAW Patrol, a preschooler-aimed program about a group of problem-solving rescue puppies.

The disturbing remixes include altered storylines involving demons, death and car crashes. They can be troubling for young viewers who come across the videos unknowingly as they consume a string of clips curated by the platform’s algorithms.

“Young children are often frightened by sudden changes and transformations, and so may be particularly upset when characters they know and trust are portrayed in disturbing situations,” says Matthew Johnson, director of education for MediaSmarts, a Canadian non-profit organization that focuses on media literacy programs.

- ANALYSIS | Is constant playtime and screen time good for the kids?

- ANALYSIS | When technology discriminates: How algorithmic bias can make an impact

PAW Patrol isn’t the only kids’ content being corrupted this way.

In a viral blog post, writer James Bridle drew attention to the equally twisted and unnerving versions of popular children’s shows including Peppa Pig, in which deviant storylines repurpose the cheery, kid-friendly originals with gore, such as an episode in which Peppa’s trip to the dentist turns into a tale of torture.

Because these altered videos are interspersed among thousands of originals and more harmless replicas, parents are, by and large, unaware of their existence when they sit their kids in front of a screen.

Peppa eats her dad

As Bridle notes, a search for “Peppa Pig dentist” returns the torture “remix” on the front page of search results, right beside the program’s verified channel.

And the scary dentist episode is just the tip of the iceberg. These videos are widespread, with other examples including one in which Peppa eats her father and another that involves drinking bleach.

YouTube has taken steps to add more moderation following the publicity.

According to YouTube, it takes a few days for content to migrate from the main YouTube platform to YouTube Kids. The company’s hope is that within that timeframe, users will flag content that is potentially disturbing to children.

In addition to filtering out inappropriate content, flagged content will now be age-restricted so that users can’t see flagged videos if they’re not logged in on accounts registered to users 18 years or older.

When Cadi Jordan’s kids were searching Netflix for Power Rangers episodes, the results included offerings such as After Porn Ends. (Cadi Jordan)

But YouTube’s approach relies heavily on users flagging the inappropriate content.

“No system is ever going to be foolproof,” says Johnson.

He adds that while YouTube’s added moderation is an improvement, it’s not a perfect solution.

YouTube isn’t the only provider of kids’ programming exposed to algorithmic flaws.

Just last week, parents started seeing adult content popping up in Netflix’s child-friendly search results.

And while the platform’s design avoids the pitfall of knock-off content like the remixes of PAW Patrol or Peppa Pig plaguing YouTube, the adult-oriented content is no less disturbing to parents.

‘We all saw it together’

Cadi Jordan, a Vancouver marketing consultant and mom of two, was shocked when she glanced at the screen in her living room where her kids were searching for shows and saw icons for films with titles including “After Porn Ends” and “Revenge Porn.”

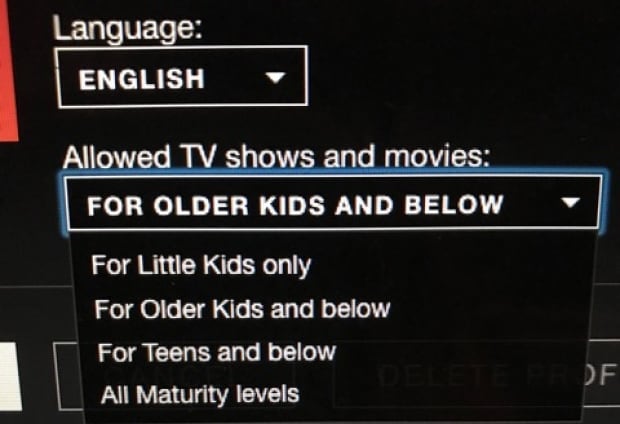

Jordan’s nine-year-old son, whose Netflix account is set to “older kids and below,” was searching for Power Rangers episodes and had started typing in the first few letters of the show when the adult titles appeared.

“We all saw it together and they looked at me,” says Jordan. “I asked if they had seen this screen in search before and they said: ‘No.’”

In an email to Jordan, Netflix’s corporate resolutions team said the issue was the result of a bug in their search system and they were happy to let her know “our engineers were able to get a fix right away and the issue is now resolved.” They apologized for “any inconvenience this issue may have caused you and your family.”

Jordan’s nine-year-old has a Netflix account set to ‘older kids and below.’ (Cadi Jordan)

Media literacy organizations like MediaSmarts recommend co-viewing, where parents watch alongside their children.

Johnson says that while technical tools can be useful to parents, they’re no substitute for being actively involved in their kids’ media lives. “While you should at the very least actively curate their media experiences, it’s best to co-view with your kids when possible,” especially since algorithmic content recommendations can lead to kids seeing unexpected things.

“I’m glad we use Netflix in the main area of the house where we can all see it,” says Jordan, who was as surprised as her kids were to see R and NR rated shows while using child-related filters in the app. She used the experience to initiate a conversation about understanding what they were seeing on screen.

But for busy parents, being able to actively watch the likes of the Power Rangers or Peppa Pig alongside their kids can often be challenging.

Digital babysitter

After all, part of the reason for the popularity of a service like YouTube Kids is that it provides a seemingly endless offering of supposedly age-appropriate and even educational content.

Sitting a child in front of an iPad programmed with shows that children love, and that parents trust, gives overburdened moms and dads a few precious minutes to send important emails, make dinner or just change the laundry.

At their best, the child-friendly offerings of digital platforms like YouTube and Netflix seem to serve the role of digital babysitter, and even teacher. Many parents say their kids learn to count or even learn words in other languages from their favourite online shows.

Other users turned up adult titles in their supposedly kid-friendly searches on Netflix. (Ramona Pringle)

But it comes as no surprise that algorithms can be flawed. As critics point out, the algorithms that dictate what appears in search results are still sort of like black boxes.

These strings of code are programmed to achieve a specific outcome, such as generating the maximum possible views or matching viewers with the content they’re searching for, but the companies deploying them still can’t predict what specific content individual viewers will see.

Not much accountability

What’s especially troubling is that when it comes to kids’ content, these algorithms aren’t just black boxes, they’re black box babysitters, watching over an entire generation of kids without any real accountability.

Because of that, “when it comes to protecting children from content, we can never rely solely on algorithms,” says Colleen Russo Johnson, a children’s media researcher and co-director of the Children’s Media Lab at Ryerson University in Toronto.

“Multiple layers of a human selection process are necessary to truly ensure safe content.”

If parents aren’t able to directly monitor their kids as they’re watching television or online content, Russo Johnson suggests they pre-select videos a child can watch.

“This takes away the possibility of inappropriate videos and also ensures parents know what their child is being exposed to in general.”

As for Jordan, her advice to other parents is to “check your settings regularly and do some searches to see what comes up.”

Don’t assume the filters will work the way they’re supposed to or that content that is deemed by software to be “kid-friendly” will actually be so. In other words, machines can do some pretty amazing things, but they’re still not the best babysitters.

Article source: http://www.cbc.ca/news/technology/childrens-videos-filters-1.4412422?cmp=rss